The Department of Energy has announced that a new computer to be housed at Los Alamos, named RoadRunner after the state bird of New Mexico, is the first computer in the world to break the petaflop barrier, that is, to perform 10^15 floating-point operations per second.

U.S. Secretary of Energy Samuel Bodman today announced that the new Roadrunner supercomputer is the first to achieve a petaflop of sustained performance. Roadrunner will be used by the Department of Energy’s National Nuclear Security Administration (NNSA) to perform calculations that vastly improve the ability to certify that the U.S. nuclear weapons stockpile is reliable without conducting underground nuclear tests.

“This enormous accomplishment is the most recent example of how the U.S. Department of Energy’s world-renowned supercomputers are strengthening national security and advancing scientific discovery,” said Secretary Bodman. “Roadrunner will not only play a key role in maintaining the U.S. nuclear deterrent, it will also contribute to solving our global energy challenges, and open new windows of knowledge in the basic scientific research fields.”

The old record holder was the IBM BlueGene computer, which managed a paltry (by comparison) 500 teraflops.

Implications for the CTBT

All of this is really important for discussions about the CTBT. When the Senate failed to ratify the treaty in 1999, one reason was concern among the Laboratory Directors about whether necessary advances in supercomputing would materialize.

An exchange between Senator John Warner and the directors of the nation’s two nuclear weapons design laboratories captured the centrality of debates about supercomputing:

Senator John Warner: … I want to get to a very fundamental question, which each of you have answered, but not with the precision that I desire. In your best estimate, how soon can this Stockpile Stewardship program, largely dependent on computers, be up and running and performing the mission that it’s been given? You describe it as the challenge, you describe it — how you’ve done — met several milestones, but each of you have honestly said we’re not there yet. So let’s just start and go to each of the three lab directors. Give us your best estimate of when this will be up.

[snip]

John C. Browne, Director, Los Alamos National Laboratory: The computer is certainly the key element to bring all of this together. There is no doubt about that. We have moved in that path this year, and last year, toward putting in place the supercomputers on a path that we think we need to have. We are on a path that, by 2004, we will have a supercomputer in place that begins to get us into the realm of what we need to do this job.

The issue that I think you are trying to address — and this is the hardest point I think as a scientist — is that we can’t predict that by such and such a date, we will know everything we need to know. It’s an evolving process. Each year you learn something else.

[snip]

I think we are going to be in the best position some time between 2005 and 2010.

Dr. Tarter?

Bruce Tarter, Director, Lawrence Livermore National Laboratory: I would agree with Dr. Browne.

Paul Robinson, then director of Sandia National Laboratories went next, but he had concerns other than supercomputing. But, for the directors of the two design laboratories, advances in supercomputing by 2010 were crucial.

At that time, the Department of Energy openly talked about a goal of 100 teraflops by 2004, basically two orders of magnitude more powerful than the fastest computer then operating, Sandia’s ASCI Red. Bruce Goodwin later told Nathan Hodge and Sharon Weinberger that he helped set the 100 teraflop estimate, which he thought unachievable:

I remember handing my answer in, thinking that they would kick me out of the room because it was insane at the time. It was 100 teraflops. That would be for a machine that could do one calculation only and take three months to complete it.

These concerns were central enough that the Los Angeles Times carried a story titled “Supercomputers Central to Nuke Test Ban’s Failure” (October 25, 1999).

That was then, this is now

Well, things have changed a little bit since 1999.

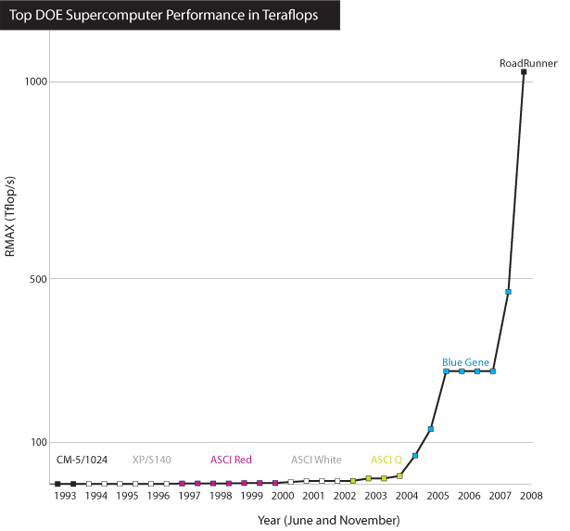

Here is a chart showing the maximum performance of the fastest computer used in nuclear weapons simulations, which not-exactly-coincidentally has also been the fastest computer in the world.

Pretty cool, huh?

DOE cruised past the “extraordinarily ambitious” 100 teraflop benchmark in early 2005, just a little behind schedule.

And now we have RoadRunner.

Just a bit of technical detail from an old semiconductor analyst….

This machine is built from a combination of IBM’s Cell processors and AMD’s Opterons, which are essentially the same as the Playstation 3’s processor and what you get in an AMD server.

One of the cheapest ways to build a supercomputer is to buy up Playstation 3s and start hooking them together.

That is why there is so much scope for determined proliferators to do really good work: the basic pieces, though not the software and test data, are pretty easy to come by.

“Here is a chart showing the maximum performance of the fastest computer used in nuclear weapons simulations, which not-exactly-coincidentally has also been the fastest computer in the world.”

I’ve heard it said that Fort Meade measures computing power not in FLOPS but in acres.

Hmmm…incredibly powerful computer. Those fools! Don’t they realise that they’re only paving the way for the rise of SkyNet!

Bullshit*** – there is enough known about nuclear weapons that their reliability is understood. This is all about modelling new weapons.

Do the French, British, Russians, Chinese, etc. plan on installing such supercomputers to verify the reliability of their much smaller effective armouries? The US has so many effective, deliverable weapons that one or two duds don’t count unless you plan on a first strike.

BTW, this computer should have been installed to model global climate change.

The mid-1990s Blue Gene/L has about the same processing power as the Playstation 3. That’s the rate of progress… impressive!

Ooops, sorry, I meant ASCI Red, not Blue Gene/L!

Also the link on the CTBT can go the other way. The JASON report on certification of WR1, the first RRW, stated “Certification for WR1 will require new experiments, ENHANCED COMPUTATIONAL TOOLS, and improved scientific understanding of the connection of the results from such experiments and simulations to the existing nuclear explosive test data.”

There have been few things as predictable as the increases in computer performance over the last 40 years. This is true for almost any measure you care to use – computations per dollar, commodity processor speeds in computations per second, and maximum speed for the world’s fastest computer. All of these measures are closely linked: for many years the world’s fastest computers have been built out of thousands of commodity processors. Although it is always a technical challenge to build the next “world’s fastest machine”, the availability of the enabling technologies that determine its speed is easily predicted.

Look at the supercomputer performance prediction graph at:

http://www.top500.org/lists/2007/11/performance_development

(Look at the middle red line in “Projected Performance Development”.)

The speed of the fastest computer and its approximate date of availability can be predicted ten years out (it will be some 1000 petaflops in 2018).
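To put a number on that prediction, here is a rough sketch of the growth rate it implies. It assumes about 1 petaflop in 2008 (Roadrunner) and takes the 1000 petaflops in 2018 figure from above at face value; the function names are made up for illustration.

```python
import math

def annual_growth_factor(start_flops, end_flops, years):
    """Constant yearly multiplier that takes start_flops to end_flops."""
    return (end_flops / start_flops) ** (1.0 / years)

def doubling_time_years(growth_factor):
    """Years needed to double at a constant yearly multiplier."""
    return math.log(2) / math.log(growth_factor)

# Assumed endpoints: ~1 petaflop in 2008, ~1000 petaflops in 2018.
factor = annual_growth_factor(1e15, 1000e15, 10)
print(f"growth per year: x{factor:.2f}")
print(f"doubling time: {doubling_time_years(factor):.2f} years")
```

In other words, the projection amounts to the fastest machine roughly doubling in speed every year.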

If indeed the Laboratory Directors cited “concern … about whether necessary advances in supercomputing would materialize” as reasons to oppose the CTBT they were resorting to the least credible argument they could have come up with.

Playstations are a lot of trouble to use off the shelf because the boards they are mounted on don’t have a lot of memory or communications bandwidth relative to the size of these calculations. I am sure the main challenge for RR was designing a motherboard.

But someone riddle me this. Suppose, in 1999, the US knew it was far away from the computations to do stockpile stewardship, but they would be there in 5-10 years. Wouldn’t going to CTBT do a lot to lock in structural advantages, and not give other nations time to learn new things from testing in those 5-10 years?

Nothing to do with stockpile maintenance and everything to do with RRW.

Anyway, why all the hysteria about warhead reliability when the weakest link in the weapons’ system is the delivery — the ICBM. The current warheads are >98% reliable.

Will Roadrunner somehow help make a more reliable ICBM?

Damn the logic — full speed ahead!

12th. CEA Tera-10 53 TFLOPS – top public French super.

24th. AWE Cray XT3 33 TFLOPS – top public UK super.

I wouldn’t be at all surprised if the top Russian, Chinese, and Indian supercomputers are in similar hands.

@FSB

“the weakest link in the weapons’ system is the delivery — the ICBM.”

Um…. the weakest link is probably in the Chain of command from POTUS on down, right to the launch crews’ willingness to obey orders.

One thing that hasn’t been made clear is whether that “petaflop” number actually reflects the performance of the computer on the software it needs to run to do weapons modelling (which I assume is, in essence, very, very clever computational fluid dynamics).

If you can divide the problem up easily, you can use any collection of computers you want – frankly, a shipping container of high-end cellphones will do – to get petaflop performance (in fact, that’s what projects like Seti@Home did – divided a data analysis problem into very small chunks and farmed them out onto random computers on the internet). But not all problems are so trivial to divide up. The more you need to share intermediate results, the fancier the system to transmit data between the various “nodes” of the supercomputer has to be, and the fraction of the theoretical peak performance you actually get becomes much smaller.
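A crude way to see how fast the achievable fraction of peak can fall is Amdahl's law. This is a stand-in model, not a description of Roadrunner: it lumps everything that cannot be split across nodes (including waiting on shared intermediate results) into a single "serial fraction", and the 10,000-node count and the fractions below are purely hypothetical.

```python
def amdahl_speedup(serial_fraction, nodes):
    """Amdahl's law: speedup over one node when serial_fraction of the work cannot be split."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / nodes)

def parallel_efficiency(serial_fraction, nodes):
    """Fraction of the ideal (nodes-times) speedup that is actually achieved."""
    return amdahl_speedup(serial_fraction, nodes) / nodes

# Hypothetical serial fractions on a hypothetical 10,000-node machine.
for s in (0.0, 0.001, 0.01):
    eff = parallel_efficiency(s, 10_000)
    print(f"serial fraction {s}: efficiency on 10,000 nodes = {eff:.1%}")
```

Even a tiny non-parallel share eats most of the theoretical peak at this scale, which is why the easily divided problems and the tightly coupled ones behave so differently.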

Roadrunner is a bunch of commodity computers (Opterons, like in your desktop computer) hooked together with some high-end, but standardized, interconnection hardware.

The real secret sauce is writing software that can take advantage of this relatively cheap, but architecturally complex, hardware.

That should really be a log graph, you know.

Lao Tao Ren:

sure. agreed.

I was talking about things not people in the weapons delivery system.

I’m all for reliable replacement wankers in the chain of command, starting from the wanker in the white house.

One thing that hasn’t been made clear is whether that “petaflop” number actually reflects the performance of the computer on the software it needs to run to do weapons modelling (which I assume is, in essence, very, very clever computational fluid dynamics).

…

The real secret sauce is writing software that can take advantage of this relatively cheap, but architecturally complex, hardware.

The quoted performance on Roadrunner is the standard Linpack benchmark test.

Yes, it is a prerequisite that you have a computer code that uses the hardware effectively, which in turn requires that the problem to be solved be intrinsically suited to the hardware, and that the software implementation effectively maps the problem to the hardware.

Fortunately for the weapons labs and weather forecasters CFD problems are composed of a mesh of locally interacting cells that intrinsically map on to massively parallel processing (MPP) mesh computers quite well.

Converting codes to run on these machines was a big task back in the late 1990s. But the first of the DOE’s MPP mesh supercomputers, ASCI Red, went through acceptance testing in 1996 and the principal hurdles in proving the CFD codes on the mesh architecture had been cleared by 1999. And although the actual weapon codes are secret (in all their implementations), MPP CFD computing is not so secret at all. In 1996 all of the world’s top 10 supercomputers were MPP systems and were mostly running unclassified CFD codes for weather and aerodynamics modeling, and by 1999 expertise in mapping CFD codes to mesh MPP systems was well established in the scientific world.
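For anyone wondering what "a mesh of locally interacting cells" buys you, here is a toy sketch. It is a one-dimensional averaging stencil, not a CFD code, and every name in it is made up for illustration. The point is only that each chunk of the mesh needs just one neighboring cell from each side (a "halo") to do its update, so the chunks can live on different nodes.

```python
def step_serial(u):
    """One averaging step over the whole mesh; the two end cells are held fixed."""
    new = list(u)
    for i in range(1, len(u) - 1):
        new[i] = (u[i - 1] + u[i] + u[i + 1]) / 3.0
    return new

def step_decomposed(u, n_chunks):
    """Same step, computed chunk by chunk the way separate nodes would."""
    n = len(u)
    bounds = [(k * n // n_chunks, (k + 1) * n // n_chunks) for k in range(n_chunks)]
    result = []
    for lo, hi in bounds:
        # "Halo exchange": the only data a chunk needs from its neighbors.
        left_halo = u[lo - 1] if lo > 0 else None
        right_halo = u[hi] if hi < n else None
        local = u[lo:hi]
        new_local = list(local)
        for j in range(len(local)):
            g = lo + j  # global index of this cell
            if g == 0 or g == n - 1:
                continue  # fixed boundary cells of the whole mesh
            left = local[j - 1] if j > 0 else left_halo
            right = local[j + 1] if j < len(local) - 1 else right_halo
            new_local[j] = (left + local[j] + right) / 3.0
        result.extend(new_local)
    return result

u = [0.0] * 16
u[8] = 1.0  # a single spike in the middle of the mesh
print(step_decomposed(u, 4) == step_serial(u))
```

Each chunk does work proportional to its size but exchanges only two cells per step, which is the property that lets this kind of problem scale on a mesh machine.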

so, if a 100 teraflop machine takes 90 days to do a single calculation, does it necessarily mean roadrunner can do it in 9 days?
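The arithmetic behind that question, as a sketch: 9 days is what you get only if the calculation scales perfectly from 100 teraflops to a petaflop. The `efficiency` parameter below is a hypothetical knob for how much of the extra speed the code actually uses; the 50% value is an illustration, not a measurement.

```python
def runtime_days(base_days, base_flops, new_flops, efficiency=1.0):
    """Runtime on the new machine if only `efficiency` of the raw speed ratio is realized."""
    speedup = (new_flops / base_flops) * efficiency
    return base_days / speedup

print(runtime_days(90, 100e12, 1e15))        # perfect scaling
print(runtime_days(90, 100e12, 1e15, 0.5))   # hypothetical: half the ideal speedup
```

So "not necessarily": the tenfold jump in peak speed sets a floor on the runtime, and how close you get depends on the code.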

I disagree with ‘blowback’s’ suggestion that ‘we know all there is to nuclear weapons and this is about modeling new ones’.

Actually, we don’t know all there is to know about the degradation of nuclear components over time in the US arsenal.

We will (continue to) be able to model the results of degraded components. Why object to that? And so what if we can improve weapons designs with the same technology without setting off a live nuke?

The CTBT has done little, if anything, to stop weapons proliferation or improvements in weapons designs.

Philip Hunt:

I agree the graph should have been presented in log format, but the linear graph is far more sexy.

@Carey

Part of the deal is there are only certain classes of problems that are amenable to parallelization. Yes, the basic software is pretty open, but the algorithms, database and, especially, the variance data between model prediction and actual (some of which can be done without a nuclear explosion or with sub-critical masses that do not fall under the test ban), are pretty closely guarded.

@House

If you have vast stockpiles of depleted uranium that you can’t find a use for, you can get pretty close to a great set of data by doing test explosions using depleted uranium. The physical properties are pretty close to the real McCoy and some astute tweaking would get you close without violating the CTBT.

There is another route to obtain this knowhow, called the “back of the book” method, that involves looking at enough designs and then working backward.

It is not known if this method was used. Though in order to do it properly, they would have had to start with a simulator with at least rudimentary data from a few real tests. I have my suspicions as to who did it this way: look who hasn’t tested much or tested…

@FSB

Talking about reliable replacements… It is going to be interesting if the next administration is interested in finding RRWs like Dick and Don that will either have to clean up after (Somalia style) or start a new game with Iran.

The gold and commodity markets, unreliable as they may be, are signaling war.

@Andrew

Concur that is the bottleneck. However, if you are trying to get mflops on the cheap, you cannot buy raw processors much cheaper than via PS3s, which are subsidized by Sony. There are ways to get around both the memory and bandwidth limitations, at a cost in performance, by some astute programming, or, if you really want, get out the soldering iron and start tweaking the board, but it can be done.

It is hardly surprising that a treaty not yet in force has had little effect.

J House – if you are going to quote me, please at least cut and paste what I wrote!

I did not say “we know all there is to (know about) nuclear weapons”, all I said is that enough is known. That is a very substantial difference as no-one will ever know all there is to know about nuclear weapons, there will always be Rumsfeld’s infamous “unknown unknowns”.

Plutonium 239 has a half-life of 24,110 years and the US has close to 100,000kg of weapons-grade plutonium, so there should be no problem for the US in maintaining a credible deterrent for the next few thousand years.

It is known that plutonium pits have a shelf-life of at least fifty years, after which radioactive decay makes them less reliable. Since the decay products of the isotopes of plutonium commonly found in weapons-grade plutonium are uranium and neptunium, the pits can be comparatively easily reprocessed to extract the remaining plutonium for manufacture of new pits. So the US could continue to maintain an effective deterrent for the foreseeable future using existing designs and existing manufacturing techniques if it wanted to.

So this is all about developing new warheads!
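For scale, the half-life quoted above translates into the following decay fractions. This is only the standard exponential decay formula applied to the 24,110-year figure; it covers Pu-239 alone and says nothing either way about how an aged pit performs. The function name is made up for illustration.

```python
def fraction_remaining(years, half_life_years=24_110):
    """Fraction of the original Pu-239 left after `years` of radioactive decay."""
    return 0.5 ** (years / half_life_years)

for years in (50, 1_000, 24_110):
    print(f"after {years} years: {fraction_remaining(years):.4f} of the Pu-239 remains")
```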